Few reputable journalism networks, if any, are using Gen AI for content production. The BBC bans its use in content, and internal research presented at a meeting of academics at an AI symposium at Cardiff University revealed that a large swathe of participants were uncomfortable with it.

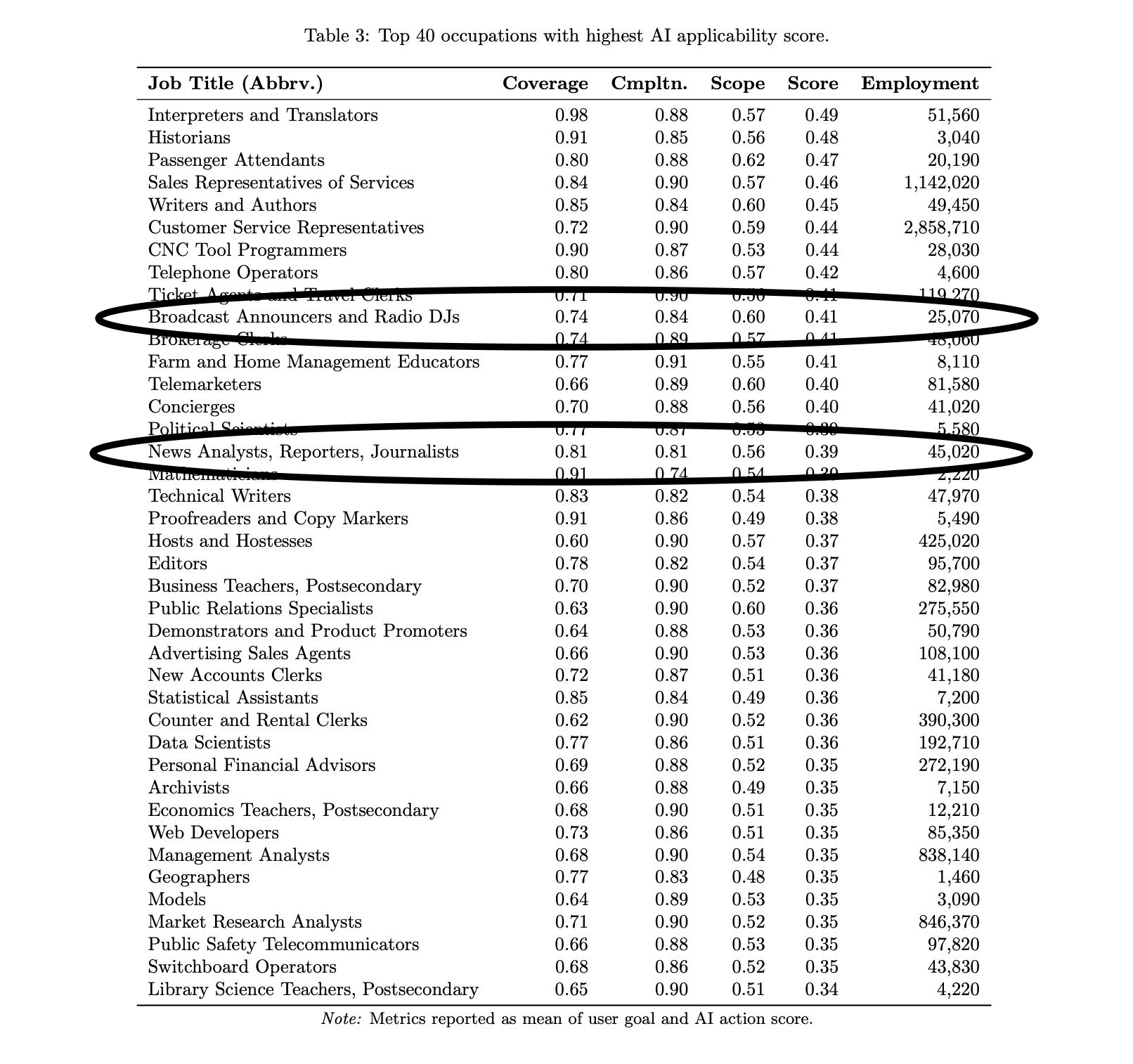

Microsoft's recent report gives a sense of which professions Gen AI has in its sights, and which can breathe easier. The higher you are in the table, the more you're at risk.

Meanwhile, online we've witnessed Gen AI's explosion in factual storytelling and deep fake news. Media organisations such as Netflix, Lionsgate and Disney have signalled a willingness to incorporate Gen AI into their VFX workflows.

Journalism's fears are well founded. If you can't distinguish, ethically and technologically, between deep fakes and what's real, why use it at all?

But in the absence of leadership, public guidance and knowledge, there's a huge risk that journalism media surrenders the initiative. In her book When Old Technologies Were New, Carolyn Marvin explains how the birth of a new technology sees a small group of people congregate around it and shape its future use.

I've witnessed this with videojournalism and the social media tools that followed. It took five years for the BBC to embrace videojournalism after its inception in the UK in 1994.

YouTube's huge success is partly down to legacy media dragging its feet. Remember, too, when Kevin Kelly, founding editor of Wired, pitched to ABC bosses in 1999? He ended the meeting by imploring executives to buy ABC.com, which was still on the market.

This year marks 20 years since I won at the US's Knight Batten Awards for Innovation in Journalism and was invited to speak at the august National Press Club, explaining a vision of the web and journalism grounded in trend extrapolation, lived experience and academic rigour.

In 2004, bringing together a small team, I demonstrated how mobile could work for journalism. In 2014, in my PhD, I wrote about the emergence of a new type of videojournalism, which a number of TV correspondents have said they use.

In 2023, I showed how AI can be used ethically in journalism and factual storytelling. The Ghanaian, a short film about Ghanaians who emigrated to the UK in the 1950s, involved rigorous research into my father's letters, interviews with neighbours in Ghana, and the limited pictures I could find.

The Laval Decree and similar actions in Anglophone Africa prohibited Africans from shooting and owning film for much of the 1900s. Artist illustrations would help scaffold the story, and Gen AI made that task simpler.

The UK's Screen Forum and Channel 4's Board of Directors invited me to their meetings to share the work. A producer of BBC Radio 4's The Artificial Human showed an interest in a possible documentary. Sadly, nothing came of it.

There were questions to be answered, principally around trust. How can you be sure the author and the content are trustworthy? A short film that pairs Gen AI imagery with actual audio from a report I produced in South Africa either further complicates this approach or makes clear how a blend of Gen AI and non-synthetic media could work.

The synthetic visuals, I explained to a media conference in India in July, were generated from memory.

By 2030, AI in journalism will be a moot point. D'you remember how museums barred anyone from photographing their exhibits until they no longer could?

Tech has a way of creating its own social necessity. But yes, the issue is trust.

How can you trust the media you're watching?

There are a number of methods beyond human perception and the Uncanny Valley effect, that is, the sense that if something "feels off" it might just be AI-generated.

In July, a national company asked me to review a piece of media. I explained that I could apply a critical eye, checking shadows, gait and improbabilities on the principle that if it's too good to be true, it likely is. But AI's development will soon eliminate these obvious flaws.

Solutions won't come from users and platforms interested in clickbait content, so what are the options? So far, the tech tools include:

- Sensity AI
- Deepware Scanner
- Hive Moderation
- BioID
- WeVerify (Chrome extension)

Here I'd like to share an approach I conceptualised while presenting to UK schools at the Rock Yer Teens conference in 2020. Two years later, while I was consulting for Google's EU News Funding Initiative, one of the applicants had encoded something similar.

Understanding the Challenge: A Visual Explanation

Click the icon on the video player to learn more about the author and their work.

Legislation will undoubtedly help protect users. Denmark's ban on deep fakes has good intentions. But governments move slowly and the online world is porous, so a combination of measures is required. It's incumbent on the very networks that lament AI to take the initiative in informing the public of its threats, with that information tucked into media such as news. With a colleague, Jose, I'm presently looking to do some work with RAG (retrieval-augmented generation) and building LLMs for bespoke communities, which I predict will become the norm. So drop me a line if you're interested.

More Articles by David

- Game On, Reimagining “Nessun Dorma”: Exploring AI in operatic storytelling.
- The Thirty Who Changed Britain's Media: A look back at media pioneers.
- BBC GLR Archives: A Rediscovered History: Uncovering lost voices from early 90s radio.
- History Repeats: Why AI Won’t “End” Filmmaking, Just Evolve It: Insights on AI's impact on filmmaking.
- My Journey in Videojournalism: Reflections on a pioneering career.